Lab5: Process

0x00 The Concepts and Syscalls

It’s hard to define what a process is. Usually it is a procedure in which the OS selects an executable file and performs the dynamic execution. During the execution there will be many interaction between the process and the hardware or virtual resources, and we know those are handled by the OS through syscalls. Besides, there are some important syscalls specially made for process management: fork/exec/waitpid:

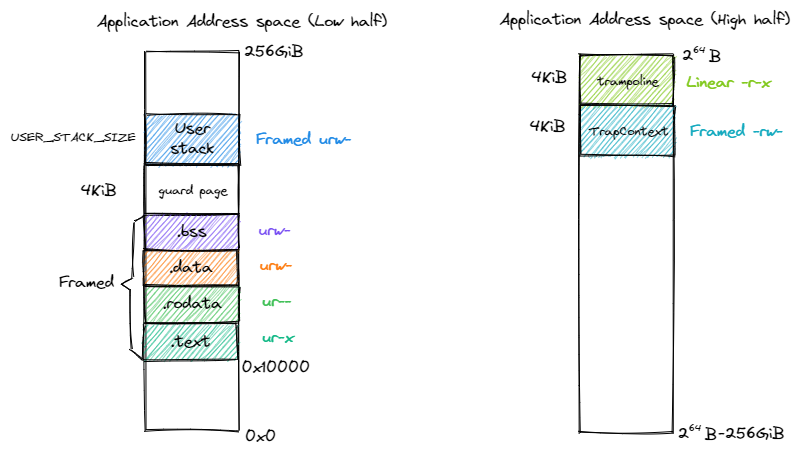

fork: When a process (let’s name it A) call fork, the kernel will create a new process (let’s name it B) which is almost identical to A: they have exactly same stack, .text segment or other data segment content, and every registers excepta0which stores the return value of the syscall. They are in different address spaces but the bytes stores in these address space are exactly same at the momentforkreturns. The only way a process can figure out whether it is the new process or the old parent is the returen value offork: 0 for the new born process andpidof child process for the parent. This parent-child relation is very important in unix-like OS.exec: This will help us run a new program in the current address space, use it together withforkwe can easily create a process that runs a new program.waitpid: When a process returns, the memory resources it have consumed cannot be fully recycled through theexitsyscall, for eaxmple the currentkernel stack. A typical solution for this is to mark the process aszombie, then it’s parent process do the rest recycle work and get the exit status through thewaitpidsyscall.

0x01 Data Structures for Process

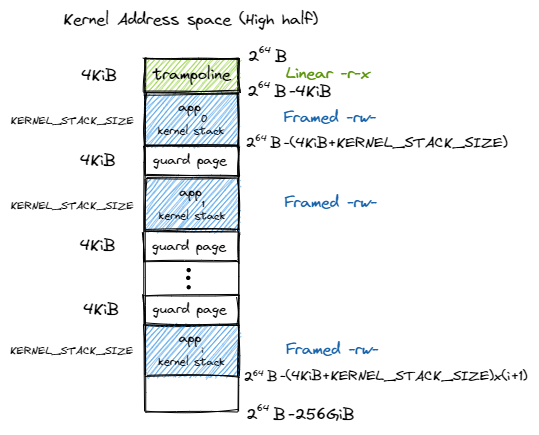

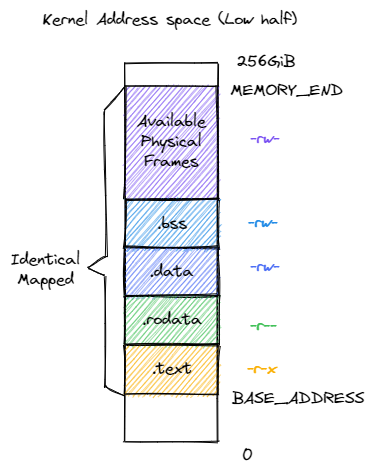

RAII is heavily used to help us safe memory management. For a process, we bind its pid, kernel stack, and address space(MemorySet) to a TaskControlBlock. The TCBs ares stored in a tree formed by the parent-child relations(created through fork&exec) between processes:

pub struct TaskControlBlock {

// immutable

pub pid: PidHandle,

pub kernel_stack: KernelStack,

// mutable

inner: UPSafeCell<TaskControlBlockInner>,

}

pub struct TaskControlBlockInner {

pub trap_cx_ppn: PhysPageNum,

pub base_size: usize,

pub priority: usize,

pub pass: usize,

pub task_cx: TaskContext,

pub task_status: TaskStatus,

pub memory_set: MemorySet,

pub parent: Option<Weak<TaskControlBlock> >,

pub children: Vec<Arc<TaskControlBlock> >,

pub exit_code: i32,

}

Here we use alloc::sync::Weak to wrap the parent pointer so that there will be no cyclic refernces between the parent and it’s child.

Another significant modification from previous chapter is that we split the original task manager module into Processor and TaskManager.

– The Processor module maintains the CPU’s states, including current process and idle task context. In a single-core CPU environment, we only create one gloable instance of Processor.

– The TaskManager stores all Arcs in a BTreeMap, so that we can easily fetch/remove or add/insert tasks(processes) with our scheduler.

pub struct TaskManager {

ready_queue: BTreeMap<usize, Arc<TaskControlBlock>>,

scheduler: Box<Scheduler>,

}

impl TaskManager {

pub fn new() -> Self {

let stride_scheduler: Scheduler = Scheduler::new();

Self {

ready_queue: BTreeMap::new(),

scheduler: Box::new(stride_scheduler),

}

}

pub fn add(&mut self, task: Arc<TaskControlBlock>) {

// update pass for stride scheduler

let mut task_inner = task.inner_exclusive_access();

task_inner.pass += BIG_STRIDE / task_inner.priority;

drop(task_inner);

self.scheduler.insert_task(task.getpid(), task.clone().inner_exclusive_access().pass);

self.ready_queue.insert(task.getpid(), task);

}

pub fn fetch(&mut self) -> Option<Arc<TaskControlBlock>> {

let next_pid = loop {

if let Some(next_pid) = self.scheduler.find_next_task() {

// TODO how about wait state

if self.ready_queue.contains_key(&next_pid){

break next_pid

}

} else {

return None;

}

};

let (_, task) = self.ready_queue.remove_entry(&next_pid).unwrap();

Some(task)

}

}