Well, I admit that I am too lazy to translate this article back into Chinese.

I am going to practice my operating system skills by working through rCore-OS from THU, a neat OS written in Rust. It is Linux compatible and targets RISC-V. In this article, we will build a very naive bare-metal program.

0x00 Get rid of standard library dependencies

This is the first challenge for any software developer moving into systems development: you cannot rely on ANY standard library (glibc, uClibc, klibc, or any other implementation), since these libraries depend on services that the OS itself provides. Let’s try to get rid of them.

In Rust and C/C++ (and almost all programming languages), before control reaches main(), the runtime does some initialization work that may rely on std and on other standard libraries (such as GNU libc). Thus we have to tell the compiler that our crate uses neither std nor the usual main entry point.

// os/src/main.rs

#![no_std]

#![no_main]

And we need to explicitly write a _start() function, which is the entry symbol the linker looks for by default.

// os/src/main.rs

#[no_mangle]

extern "C" fn _start() {

// Nothing here now

}

Besides, the compiler requires a #[panic_handler] function, or the crate will not build. Normally std takes care of that, but now we have to add one manually.

// os/src/lang_items.rs

use core::panic::PanicInfo;

#[panic_handler]

fn panic(_info: &PanicInfo) -> ! {

loop {} // The handler returns `!`, so it must never return; spin forever for now

}

Note that Rust’s core crate (the platform-independent part of the standard library) can still be used on bare metal, and it is very useful there.
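To give a feel for what core still offers, here is a minimal sketch of formatting text without std and without a heap. FixedBuf and describe_entry are hypothetical names made up for this illustration, not part of rCore; the only library pieces used are core::fmt::Write and the write! macro.

```rust
use core::fmt::{self, Write};

/// Hypothetical fixed-capacity text buffer; it needs no allocator.
pub struct FixedBuf<const N: usize> {
    buf: [u8; N],
    len: usize,
}

impl<const N: usize> FixedBuf<N> {
    pub const fn new() -> Self {
        Self { buf: [0; N], len: 0 }
    }

    pub fn as_str(&self) -> &str {
        // Only whole &str pieces are ever copied in, so the bytes stay valid UTF-8.
        core::str::from_utf8(&self.buf[..self.len]).unwrap_or("")
    }
}

impl<const N: usize> Write for FixedBuf<N> {
    fn write_str(&mut self, s: &str) -> fmt::Result {
        let end = self.len + s.len();
        if end > N {
            // Refuse the whole piece rather than truncating it.
            return Err(fmt::Error);
        }
        self.buf[self.len..end].copy_from_slice(s.as_bytes());
        self.len = end;
        Ok(())
    }
}

/// Formats an address the way a kernel log line might (illustrative only).
pub fn describe_entry(addr: usize) -> FixedBuf<32> {
    let mut out = FixedBuf::new();
    // An overflow error is ignored in this sketch.
    let _ = write!(out, "entry = {:#x}", addr);
    out
}
```

Everything here comes from core, so the same code should compile unchanged in a #![no_std] crate; on bare metal the write_str body would typically push bytes to a console instead of a buffer.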

Next, we need to make it possible to run our program directly on the CPU without any OS support.

0x01 Make the CPU run it

For an ordinary program, running it is easy: all you have to do is type its name in a shell and hit Enter, or double-click the exe file in Windows. That ease comes courtesy of the OS. As we are creating an OS, things get a little more complicated. Let’s first think about what happens when the CPU starts working.

The bootloader for QEMU can be found at: https://github.com/itewqq/rCore-dev/tree/main/bootloader

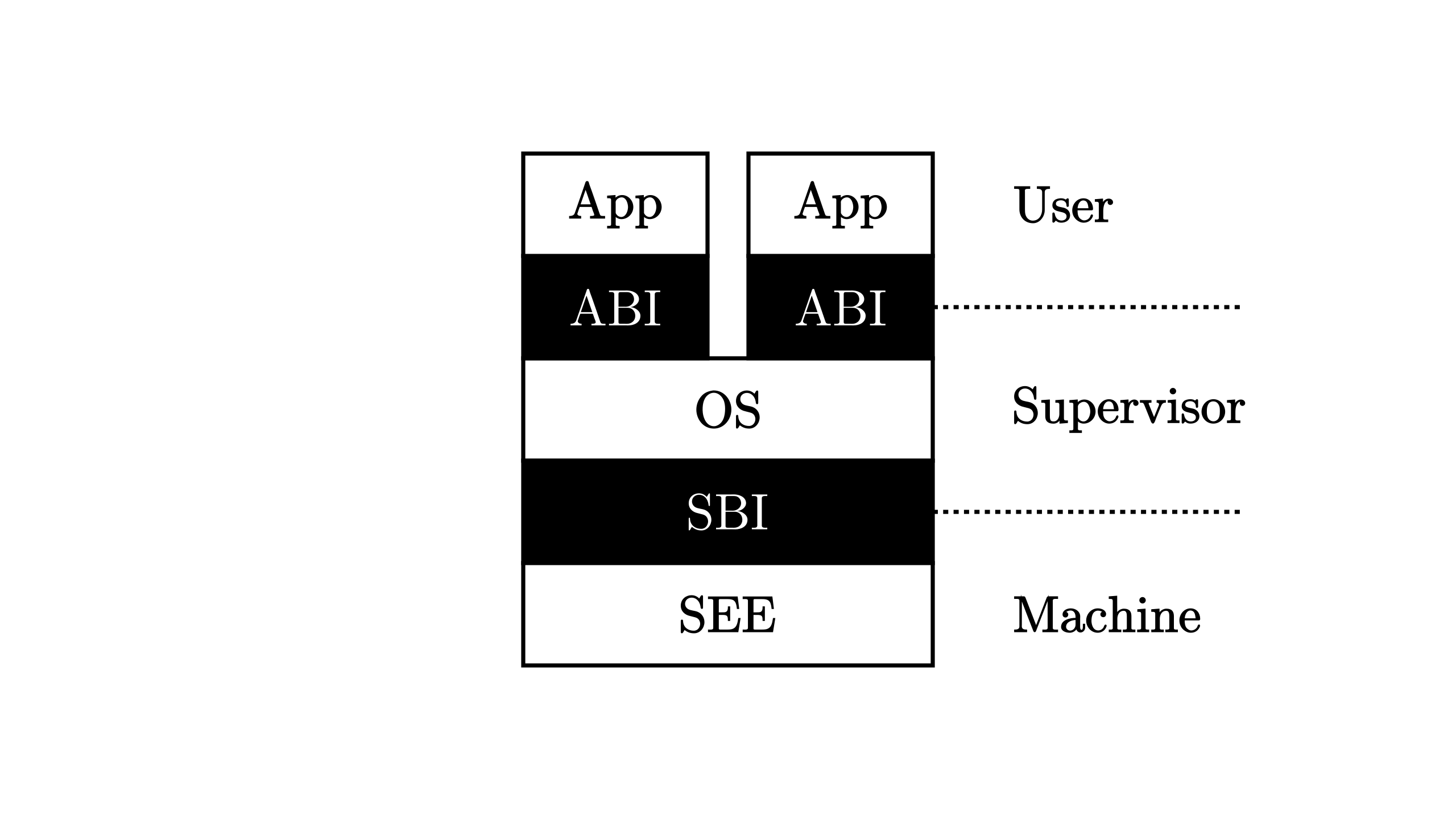

When the CPU (riscv64 emulated by QEMU in our case) is powered on, the other general-purpose registers are cleared to zero and the PC register points to 0x1000. The code at 0x1000 is the first to execute after power-up (a small piece of boot code fixed in the hardware), and it quickly jumps to 0x80000000, the first instruction of the bootloader, RustSBI. After basic hardware initialization, RustSBI jumps to 0x80200000 (for QEMU), where the operating system binary is loaded, and executes the first instruction of the operating system. From there, the operating system we wrote starts to work.
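The hand-off chain described above can be written down as a tiny model. This is only a sketch to keep the three addresses straight: the Stage enum and boot_trace function are hypothetical names, and the addresses are the QEMU ones quoted in the text.

```rust
/// Addresses quoted in the text for QEMU's riscv64 machine.
pub const RESET_VECTOR: usize = 0x1000;
pub const RUSTSBI_ENTRY: usize = 0x8000_0000;
pub const KERNEL_ENTRY: usize = 0x8020_0000;

/// Hypothetical model of who is running at each step of the boot.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Stage {
    BootRom,
    RustSbi,
    Kernel,
}

impl Stage {
    /// First instruction executed by this stage.
    pub const fn entry(self) -> usize {
        match self {
            Stage::BootRom => RESET_VECTOR,
            Stage::RustSbi => RUSTSBI_ENTRY,
            Stage::Kernel => KERNEL_ENTRY,
        }
    }

    /// The stage this one jumps to, if any.
    pub const fn next(self) -> Option<Stage> {
        match self {
            Stage::BootRom => Some(Stage::RustSbi),
            Stage::RustSbi => Some(Stage::Kernel),
            Stage::Kernel => None,
        }
    }
}

/// Follows the chain from power-on and records each (stage, entry address).
pub fn boot_trace() -> [(Stage, usize); 3] {
    let mut trace = [(Stage::BootRom, 0); 3];
    let mut stage = Some(Stage::BootRom);
    let mut i = 0;
    while let Some(s) = stage {
        trace[i] = (s, s.entry());
        i += 1;
        stage = s.next();
    }
    trace
}
```

One thing the constants make visible: the kernel entry sits exactly 2 MiB (0x200000 bytes) above the RustSBI entry.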

About the SBI: SBI (Supervisor Binary Interface) is a low-level specification for RISC-V. The relationship between the operating system kernel and RustSBI, which implements the SBI specification, is somewhat like the relationship between an application and the operating system kernel, with the latter providing certain services to the former. However, SBI provides only a few services, so it can help the OS kernel with a limited set of functions; these are very low-level but important, such as shutting down the machine or printing characters to the console. Whatever services RustSBI provides, the OS kernel can call directly.

So it’s clear that we have to place our built OS at address 0x80200000 (for QEMU). By default, the toolchain uses a user-mode memory layout, which is not what we want: for example, the entry address of the generated binary will not be 0x80200000. To fix that, we need a custom linker script that puts every section in the right place:
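To make "calling an SBI service" concrete, here is a hedged sketch of how a kernel might wrap the ecall instruction. It assumes the legacy SBI extensions (console putchar with ID 1, shutdown with ID 8), which newer SBI versions deprecate but many tutorials still use. The function names are illustrative, and the non-RISC-V branch is only a stand-in of mine so the sketch can be tried on an ordinary host.

```rust
// Legacy SBI extension IDs, as listed in the RISC-V SBI specification.
pub const SBI_CONSOLE_PUTCHAR: usize = 1;
pub const SBI_SHUTDOWN: usize = 8;

/// On RISC-V: trap into the SBI implementation (e.g. RustSBI) with `ecall`.
#[cfg(target_arch = "riscv64")]
pub fn sbi_call(which: usize, arg0: usize, arg1: usize, arg2: usize) -> usize {
    let ret: usize;
    unsafe {
        core::arch::asm!(
            "ecall",
            inlateout("x10") arg0 => ret, // a0: first argument in, return value out
            in("x11") arg1,               // a1: second argument
            in("x12") arg2,               // a2: third argument
            in("x17") which,              // a7: which service is requested
        );
    }
    ret
}

/// Host stand-in (an assumption for demonstration): emulate putchar on stdout.
#[cfg(not(target_arch = "riscv64"))]
pub fn sbi_call(which: usize, arg0: usize, _arg1: usize, _arg2: usize) -> usize {
    match which {
        SBI_CONSOLE_PUTCHAR => {
            print!("{}", arg0 as u8 as char);
            0
        }
        _ => usize::MAX, // service not emulated on the host
    }
}

/// Prints one byte through the SBI console; 0 means success.
pub fn console_putchar(c: usize) -> usize {
    sbi_call(SBI_CONSOLE_PUTCHAR, c, 0, 0)
}

/// Prints a string byte by byte and returns how many bytes were accepted.
pub fn console_puts(s: &str) -> usize {
    let mut sent = 0;
    for b in s.bytes() {
        if console_putchar(b as usize) == 0 {
            sent += 1;
        }
    }
    sent
}
```

The shape mirrors a system call: the kernel loads a service number and arguments into registers, executes ecall, and reads the result back from a0, just as an application would when asking the kernel for something.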

OUTPUT_ARCH(riscv)

ENTRY(_start)

BASE_ADDRESS = 0x80200000;

SECTIONS

{

. = BASE_ADDRESS;

skernel = .;

stext = .;

.text : {

*(.text.entry)

*(.text .text.*)

}

. = ALIGN(4K);

etext = .;

srodata = .;

.rodata : {

*(.rodata .rodata.*)

*(.srodata .srodata.*)

}

. = ALIGN(4K);

erodata = .;

sdata = .;

.data : {

*(.data .data.*)

*(.sdata .sdata.*)

}

. = ALIGN(4K);

edata = .;

.bss : {

*(.bss.stack)

sbss = .;

*(.bss .bss.*)

*(.sbss .sbss.*)

}

. = ALIGN(4K);

ebss = .;

ekernel = .;

/DISCARD/ : {

*(.eh_frame)

}

}
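The repeated ". = ALIGN(4K);" lines in the script round the current location up to the next 4 KiB boundary, so every section starts on its own page. The arithmetic can be sketched as follows; the section sizes passed in are made-up inputs, section_starts is a hypothetical helper, and the model ignores any extra alignment the linker may insert for individual input sections.

```rust
pub const BASE_ADDRESS: usize = 0x8020_0000;
pub const PAGE: usize = 4 * 1024;

/// Rounds `addr` up to the next multiple of `align` (which must be a power of two).
pub const fn align_up(addr: usize, align: usize) -> usize {
    (addr + align - 1) & !(align - 1)
}

/// Start addresses of .text, .rodata, .data and .bss for the given
/// (hypothetical) sizes of the first three sections, following the script's
/// "place section, then ALIGN(4K)" pattern.
pub fn section_starts(text: usize, rodata: usize, data: usize) -> [usize; 4] {
    let stext = BASE_ADDRESS;                      // . = BASE_ADDRESS; stext = .;
    let srodata = align_up(stext + text, PAGE);    // etext = srodata
    let sdata = align_up(srodata + rodata, PAGE);  // erodata = sdata
    let bss_start = align_up(sdata + data, PAGE);  // edata; .bss (stack first) begins here
    [stext, srodata, sdata, bss_start]
}
```

For example, a 0x1234-byte .text pushes .rodata to 0x80202000, not 0x80201234. Note that in the script sbss is defined after *(.bss.stack), so sbss lies above the start of the .bss section by the size of the boot stack.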

Then we force Cargo to use it when linking:

# os/.cargo/config

[build]

target = "riscv64gc-unknown-none-elf"

[target.riscv64gc-unknown-none-elf]

rustflags = [

"-Clink-arg=-Tsrc/linker.ld", "-Cforce-frame-pointers=yes"

]
